- Warns of Civilisational Risks

- Turns to Poetry Full-Time

- A Sudden Resignation

Article Today, London:

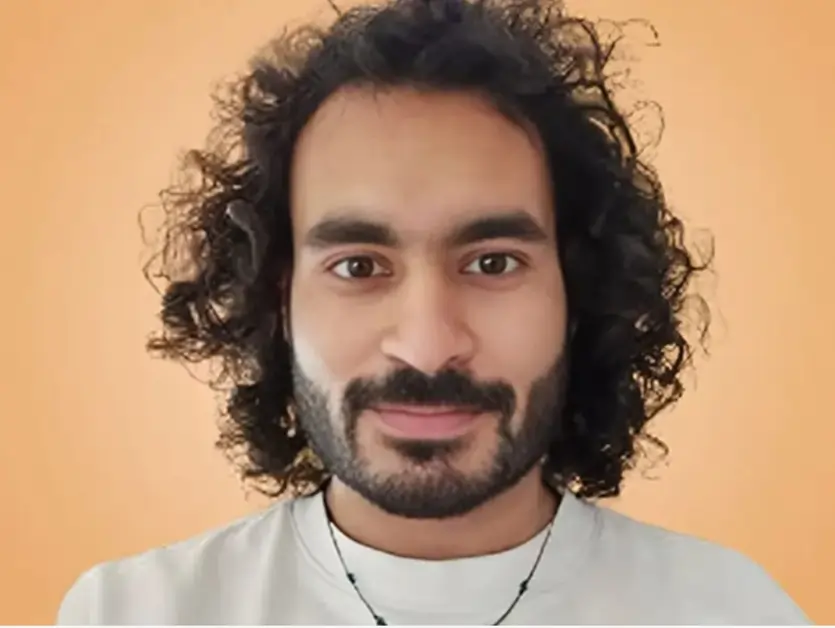

Mrinank Sharma, a senior AI safety researcher at Anthropic, has resigned from his position. He has walked away from a high-paying role to pursue poetry in the United Kingdom. The decision has triggered debate within technology and policy circles. Sharma has argued that the world is moving closer to systemic risk due to rapid advances in artificial intelligence.

Warning on Unchecked AI

Sharma specialised in AI safety research. His work focused on long-term risks posed by advanced models. He has stated that technical capability is scaling faster than ethical oversight. According to him, society lacks adequate regulatory and moral frameworks to govern powerful AI systems. Therefore, he believes the risk of misuse is rising.

Corporate Contradictions

Anthropic positions itself as a safety-focused AI company. It develops large language models under the Claude brand. The firm has publicly emphasised responsible deployment and alignment research. However, Sharma has suggested that commercial pressures increasingly shape institutional priorities. He has indicated that internal tensions exist between safety commitments and market competition.

Competitive Pressures

The AI sector is witnessing intense rivalry. OpenAI and other firms are expanding model capabilities at a rapid pace. Meanwhile, investor expectations remain high. Revenue models, including enterprise contracts and platform monetisation, are becoming central to strategy. In such an environment, safety research may face structural constraints. Sharma has implied that these pressures weaken ethical safeguards.

Biosecurity Concerns

In addition, Sharma has raised concerns about biosecurity. He has studied scenarios where AI tools could assist malicious actors in designing harmful biological agents. He has not alleged current misuse. However, he has argued that the growing accessibility and capability of models lower the technical barriers to such misuse. Therefore, he believes stronger international oversight is essential.

Shift to Literature

Sharma has said he will now focus on poetry and literary study in the UK. He has cited the limitations of purely technical responses to existential risk. In his view, cultural and moral imagination must accompany scientific progress. He has referred to European literary traditions as a source of ethical reflection. However, he has not outlined a formal policy platform.

Broader Debate

His resignation has added to an ongoing debate about AI governance. Policymakers in the US and Europe are discussing regulatory frameworks. Companies continue to release more capable models. Meanwhile, civil society groups demand transparency and accountability. Sharma’s departure underscores a growing unease within sections of the research community.

The episode reflects a wider tension. Advanced AI promises productivity and innovation. At the same time, it raises structural risks that remain insufficiently addressed. Sharma’s move from code to poetry may be symbolic. However, the questions he has raised remain unresolved.